The GPU industry has been advancing at a blistering pace, but few innovations have made as immediate and transformative an impact as the NVIDIA H200 Tensor Core GPU. At the heart of this leap forward is one crucial specification: 141 GB of ultra-fast HBM3e memory. This isn’t just a bigger number—it represents a fundamental shift in how modern AI models, high-performance computing (HPC), and data-intensive workloads can be powered.

In this post, we’ll break down why the H200 specs are such a big deal and how they change the landscape for developers, enterprises, and researchers alike.

What Is HBM3e and Why It Matters

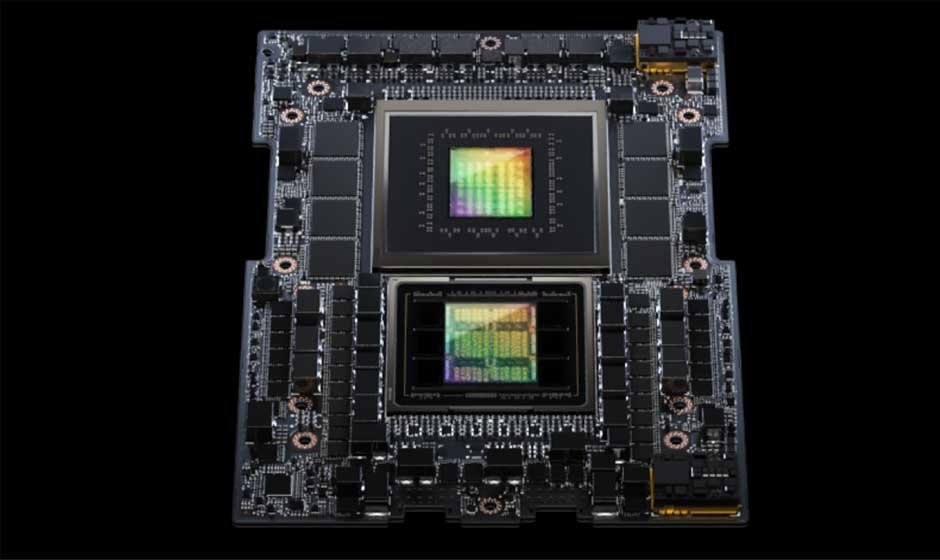

HBM3e (High Bandwidth Memory 3e) is an extension of the HBM3 generation of stacked memory, designed for maximum throughput and energy efficiency. Unlike traditional GDDR memory, which sits on the circuit board around the GPU, HBM stacks share a package with the GPU die and connect to it over a very wide interface. That short, wide data path is what delivers the bandwidth advantage.

The H200’s 141 GB of HBM3e memory provides 4.8 TB/s of bandwidth, so the GPU could in principle read its entire memory in roughly 30 milliseconds. For comparison, the H100 SXM, already considered a powerhouse, offered 80 GB of HBM3 memory at about 3.35 TB/s. The jump to 141 GB means some workloads that previously needed multiple GPUs can now be handled by just one.
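To put the bandwidth figures in perspective, here is a minimal back-of-the-envelope sketch. It assumes the published peak numbers (4.8 TB/s for the H200, about 3.35 TB/s for the H100 SXM) and a simplified model of LLM decoding in which every generated token streams all model weights from memory once at batch size 1. The 70 GB weight size is a hypothetical example, and real throughput will be lower than these ceilings.

```python
# Back-of-the-envelope memory-bandwidth estimates.
# Assumptions: peak published bandwidth, batch size 1, and decode speed
# limited only by how fast weights can be read from HBM.

GB = 1e9
TB = 1e12

GPUS = {
    "H100 SXM": {"memory_gb": 80, "bandwidth_tbps": 3.35},
    "H200": {"memory_gb": 141, "bandwidth_tbps": 4.8},
}


def full_memory_sweep_ms(memory_gb, bandwidth_tbps):
    """Time in milliseconds to read the whole memory once at peak bandwidth."""
    return memory_gb * GB / (bandwidth_tbps * TB) * 1000


def max_tokens_per_second(weight_gb, bandwidth_tbps):
    """Upper bound on decode speed if each token reads all weights once."""
    return bandwidth_tbps * TB / (weight_gb * GB)


if __name__ == "__main__":
    weight_gb = 70  # hypothetical model: 70 GB of weights
    for name, spec in GPUS.items():
        sweep = full_memory_sweep_ms(spec["memory_gb"], spec["bandwidth_tbps"])
        tps = max_tokens_per_second(weight_gb, spec["bandwidth_tbps"])
        print(f"{name}: full sweep {sweep:.1f} ms, decode ceiling {tps:.0f} tokens/s")
```

The useful takeaway is the ratio rather than the absolute numbers: under this simplified model, the decode ceiling scales directly with memory bandwidth.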

Why 141 GB Changes the Game for AI

The explosive growth of AI models like GPT-4, Stable Diffusion, and LLaMA has highlighted a critical bottleneck: memory capacity. Large language models (LLMs) and foundation models need tens to hundreds of gigabytes of memory for their parameters and intermediate states.

With the H200:

- Bigger models fit on a single GPU: Researchers can train or fine-tune models with billions of parameters without relying as heavily on multi-GPU setups.

- Faster inference: Higher memory bandwidth reduces latency when serving AI models, improving real-time applications like chatbots, voice assistants, and generative media.

- Lower scaling complexity: Fewer GPUs needed per workload means reduced networking overhead, lower power consumption, and simplified infrastructure management.

In short, 141 GB of memory unlocks the full potential of today’s cutting-edge AI models, while paving the way for the even larger models of tomorrow.
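A quick way to see what "fits on a single GPU" means is to estimate the memory a model's weights need at a given precision. The sketch below is a rough inference-time estimate only. The 20% overhead for activations and KV cache is an assumed placeholder, not a measured value, and training needs considerably more memory for gradients and optimizer state.

```python
# Rough check of whether a model's weights fit in GPU memory.
# Assumptions: inference only, and a flat overhead fraction for
# activations and KV cache (hypothetical; tune for your workload).

BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_memory_gb(num_params, dtype):
    """Memory in GB needed to store the weights alone."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits(num_params, dtype, capacity_gb, overhead_fraction=0.2):
    """True if weights plus the assumed overhead fit within capacity_gb."""
    needed_gb = weight_memory_gb(num_params, dtype) * (1 + overhead_fraction)
    return needed_gb <= capacity_gb


if __name__ == "__main__":
    capacities = {"80 GB GPU": 80, "141 GB GPU": 141}
    for params in (13e9, 70e9):
        for dtype in ("fp16", "fp8"):
            size = weight_memory_gb(params, dtype)
            verdict = {name: fits(params, dtype, cap) for name, cap in capacities.items()}
            print(f"{params / 1e9:.0f}B params @ {dtype}: {size:.0f} GB weights, fits: {verdict}")
```

Under these assumptions, a 70-billion-parameter model stored at 8-bit precision overflows an 80 GB card but fits comfortably in 141 GB, while the same model at 16-bit still needs more than one GPU.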

HPC, Scientific Workloads, and Enterprise Applications

The H200 isn’t just for AI researchers—it has broad implications across industries:

- Climate modeling & physics simulations: These workloads demand massive memory and bandwidth to simulate real-world phenomena at high fidelity. With 141 GB of HBM3e, each GPU can hold a larger portion of the dataset, improving accuracy and efficiency.

- Healthcare & drug discovery: Molecular dynamics and genomics research benefit from faster memory throughput, cutting down discovery timelines.

- Financial services: Real-time risk modeling and fraud detection can be run on larger datasets without offloading computations to slower storage tiers.

For enterprises, this translates to shorter training cycles, reduced infrastructure costs, and faster time-to-market for AI-driven products.

The Competitive Advantage

Competing accelerators are also pushing memory capacity upward, but the NVIDIA H200 is positioned as the go-to accelerator for organizations that want to stay ahead. Cloud providers, hyperscalers, and Fortune 500 companies are already preparing deployments that will leverage its memory advantage for both training massive AI models and deploying them at scale.

From a market perspective, this makes the H200 not just a product, but a strategic differentiator. The ability to consolidate workloads, reduce cluster complexity, and accelerate AI development cycles will set early adopters apart.

Final Thoughts

The NVIDIA H200’s 141 GB of HBM3e memory isn’t just a spec bump—it’s a paradigm shift. By combining unprecedented memory capacity with ultra-fast bandwidth, it removes one of the most significant bottlenecks in AI and HPC.

For developers, it means the freedom to innovate without compromise. For enterprises, it means faster results with lower infrastructure overhead. And for the industry as a whole, it signals the next stage in the AI revolution.

If the H100 was the GPU that defined 2023, the H200 is the GPU that will redefine 2024 and beyond.